That’s good news. Odds are that some of those people will go back to closed media platforms after ~2 months; but the ones who stay help Mastodon and the Fediverse to grow.

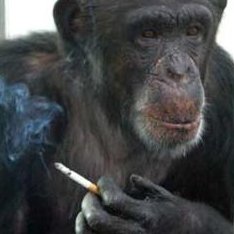

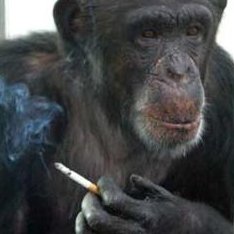

The catarrhine who invented a perpetual motion machine, by dreaming at night and devouring its own dreams through the day.

Federation woes?

Your comment has a different take, though, and it adds value to the discussion; it isn’t just the same as what I said. Both are complementary.

Even more accurately: it’s bullshit.

“Lie” implies that the person knows the truth and is deliberately saying something that conflicts with it. However, the sort of people who spread misinfo don’t really care about what’s true or false; they only care about whether something reinforces their claims.

When it comes to how people feel about AI translation, there is a definite distinction between utility and craft. Few object to using AI in the same way as a dictionary, to discern meaning. But translators, of course, do much more than that. As Dawson puts it: “These writers are artists in their own right.”

That’s basically my experience.

LLMs are useful for translation in three situations:

Past that, LLM-based translations are a sea of slop: they screw up the tone and style, add stuff not present in the original, repeat sentences, remove critical bits, pick unsuitable synonyms, and so on. All the bloody time.

And if you’re handling dialogue, they will fuck it up even in shorter excerpts, by making all characters sound the same.

How long until this bot gets banned for “harassment”?

Predictable outcome for anyone not wallowing in wishful belief.

Easier: n(13-n).

I have never met a person who can isolate the moment when Tucker Carlson became Alex Jones. So, where did it come from exactly? …it’s very clear to me both are demons.

Yeah. I got a leg scar from a domestic cat that I’ve raised from kittendom, and who’d easily have ripped my face off if she could reach it*. A wild, larger, and more powerful version of that seems like a bad idea.

*Because I was holding a kitten that she had never seen before. Yup. Fuck you, Kika; I love you, but you’re a bloody arsehole.

Yup, I got that you don’t mean that everyone there is a bot. I just don’t think there are as many of them as you’re saying; it’s certainly not as much as half the users, or even half the activity (bots tend to be more active than actual users).

They’re still wreaking damage on the place, though. Eventually their proportion will plateau, but their absolute numbers will go down alongside those of the actual users.

For real. Companies being extra pushy with their product always makes me picture their decision makers saying:

“What do you mean, «we’re being too pushy»? Those are customers! They are not human beings, nor do they deserve to be treated as such! This filth is stupid and un-human-like, it can’t even follow simple orders like «consume our product»! Here we don’t appeal to its reason, we smear advertisement on its snout until it has to open its mouth to breathe, and then we shove the product down its throat!”

Is this accurate? Probably not. But it does feel like this, especially when they’re trying to force a product with limited use cases, such as machine text and image generation, down everyone’s throats, even after plenty of potential customers said “eeew no”.

The insertion of an all knowing checker who could have written it himself anyway

The checker does make all the difference, but he doesn’t need to be able to write it himself. It could even be a brainless process, such as natural selection.

I think the point is less about any kind of route to Hamlet, and more about the absurdity of infinite tries in a finite space(time).

I know. It’s just that creationists misuse that metaphor so often that I couldn’t help but share my brainfart here.

Bots are parasites: they only thrive if the host population is large enough to maintain them. Once the hosts are gone, the parasites are gone too.

In other words: botters only bot a platform when they expect human beings to see and interact with the output of their bots. As such they can never become the majority: once they do, botting there becomes pointless.

That applies even to repost bots: you could have other bots upvoting the repost, but you won’t do it unless you can sell the account to an advertiser, and the advertiser will only buy it if they can “reach” an “audience” (i.e. spam humans).

Shorthand for “third-language [English] speaker”. I mean that I’m prone to swapping a few words here and there, due to other languages interfering inside my head.

This sounds familiar, almost as if history could perhaps, maybe, just possibly… repeat itself? Nah! (says spez)

Digg, right? Yeah. Perhaps spez even knew that it would repeat, but was smart (and shitty) enough to jump off the ship before it happened.

Perhaps even worse: Wobblesticke, Jiggleweapone, stuff like this.

If we’re counting even chimps as “monkeys”, there are already eight billion of them; I think that’s enough.

I am not sure, but I believe that this political abuse is further reinforced by something not mentioned in the text:

It’s a breeding ground for witch hunting: people don’t get why someone said something; they’re dishonest, so they assume why; and they bring out the pitchforks because they found a witch. And that’s bound to affect anyone voicing anything slightly outside the echo chamber.

And I think that this has been going on for years; cue “the Twitter main character of the day”. This predates Musk, but after Musk took over, he actually encouraged the witch hunts for his own political goals.